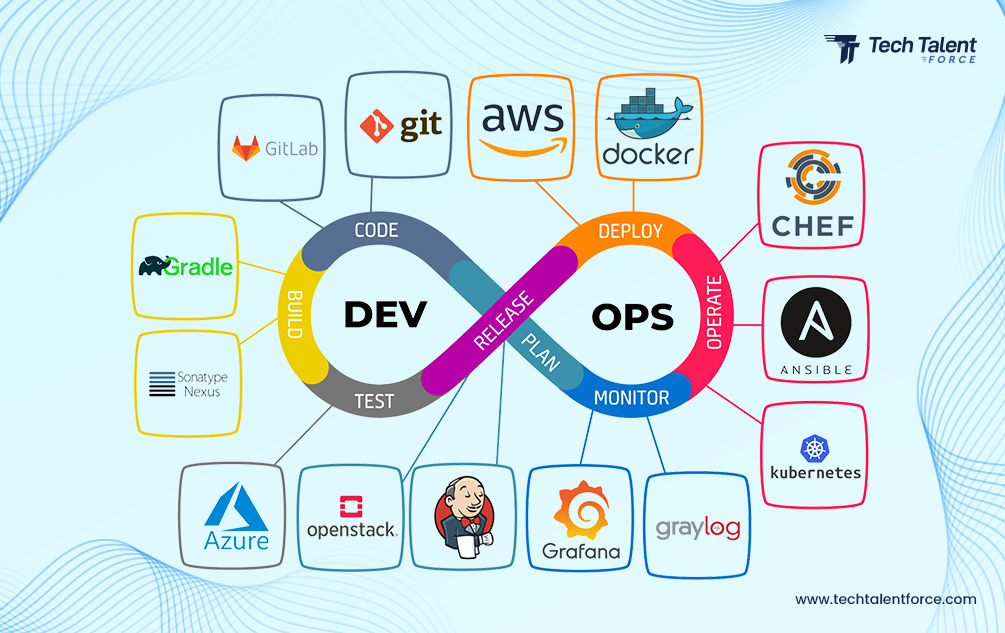

DevOps encompasses a multifaceted set of practices and tools designed to optimize software development and IT operations. Key components of DevOps services include:

Active monitoring sustains high system availability and performance by collecting and analyzing real-time data, enabling proactive issue resolution.

Infrastructure as Code manages infrastructure through version-controlled code, ensuring reproducibility and agility in deployment.

Virtualization and containerization provide isolated environments for consistent application deployment across diverse systems, enhancing portability.

AIOps leverages artificial intelligence and machine learning for intelligent IT operations management, enhancing operational efficiency.

ModelOps extends DevOps principles to the lifecycle management of AI/ML models, optimizing model deployment and performance.

GitOps uses Git as the single source of truth for declarative infrastructure and application definitions, with automated processes reconciling the running system against the repository, streamlining development and deployment.
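Both Infrastructure as Code and GitOps rest on the same idea. You declare a desired state, and tooling reconciles it against what is actually running. The sketch below is a simplified illustration, not how any particular tool works. It diffs a desired state (as it might be read from a Git repository) against the live state and lists the create, update, and delete actions a reconciler would apply. The resource names and attributes are hypothetical.

```python
from typing import Dict, List, Tuple

# Hypothetical resource model: resource name -> attribute dict.
Resources = Dict[str, Dict[str, object]]

def plan(desired: Resources, actual: Resources) -> List[Tuple[str, str]]:
    """Return (action, name) pairs that would move `actual` toward `desired`."""
    actions: List[Tuple[str, str]] = []
    for name in sorted(desired):
        if name not in actual:
            actions.append(("create", name))
        elif desired[name] != actual[name]:
            actions.append(("update", name))
    for name in sorted(actual):
        if name not in desired:
            actions.append(("delete", name))
    return actions

# Desired state, e.g. as committed to a repository (illustrative values).
desired = {
    "web": {"image": "web:1.4", "replicas": 3},
    "worker": {"image": "worker:2.0", "replicas": 2},
}
# Live state as observed in the running environment (illustrative values).
actual = {
    "web": {"image": "web:1.3", "replicas": 3},
    "cache": {"image": "redis:7", "replicas": 1},
}
print(plan(desired, actual))
# [('update', 'web'), ('create', 'worker'), ('delete', 'cache')]
```

A GitOps controller would run a loop like this continuously and apply the resulting actions. Keeping the diff separate from the apply step lets a change be reviewed before anything is modified.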

Each component contributes significantly to the efficiency, security, and scalability of software development and IT operations, fostering a culture of continuous improvement and collaboration.

DevOps streamlines development and deployment pipelines, reducing time-to-market through automated processes and collaborative workflows.

DevOps service providers offer tailored solutions addressing specific organizational needs, encompassing development, testing, deployment, monitoring, and security.

Access to DevOps experts facilitates the effective integration of automation tools, continuous delivery pipelines, security measures, and monitoring systems, maximizing the benefits of DevOps practices.

By embracing DevOps services, organizations can enhance operational efficiency, drive innovation, and achieve tangible business outcomes.

DevOps services play a pivotal role in driving digital transformation within large enterprises. Key contributions of DevOps services to enterprise transformation include:

DevOps supports automated infrastructure setup and application deployment, facilitating the adoption of flexible microservices architectures and optimizing infrastructure utilization.

DevOps promotes collaboration across development, operations, and business teams, streamlining software delivery processes and improving overall efficiency.

DevOps methodologies foster a culture of continuous learning and experimentation, enabling rapid adaptation to market changes and customer needs.

Large enterprises can leverage DevOps services to optimize operations, enhance flexibility, and maintain a competitive edge in today’s dynamic business landscape.

Logging services collect, store, and analyze log data from various components, providing insights into system behavior and application performance.

Monitoring services continuously monitor system performance, enabling proactive issue identification and swift incident response.

By analyzing log data and monitoring metrics, organizations gain insights for continuous system improvement and optimization.

Incorporating logging and monitoring services into DevOps strategies enhances operational visibility, promotes proactive maintenance, and drives continuous enhancement of software systems.
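Log data is much easier to store, search, and analyze when each event is emitted as structured data rather than free text. The formatter below is a minimal sketch that uses only Python's standard `logging` module and writes one JSON object per line. The field names (`ts`, `level`, `order_id`, and so on) and the `checkout` logger are illustrative choices, not a required schema.

```python
import json
import logging
import sys

class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "ts": self.formatTime(record, "%Y-%m-%dT%H:%M:%S"),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Merge any structured fields passed via `extra={"fields": {...}}`.
        payload.update(getattr(record, "fields", {}))
        return json.dumps(payload)

def make_logger(name: str, stream=sys.stdout) -> logging.Logger:
    """Create a logger that writes JSON lines to `stream`."""
    logger = logging.getLogger(name)
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.handlers = [handler]  # replace any previously attached handlers
    logger.setLevel(logging.INFO)
    logger.propagate = False     # avoid duplicate output via the root logger
    return logger

log = make_logger("checkout")
log.info("order placed", extra={"fields": {"order_id": "A-1001", "latency_ms": 42}})
```

Because every line is valid JSON, a log pipeline can index fields such as `latency_ms` directly instead of parsing free-form messages.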

End-to-end logging and monitoring practices are instrumental in capturing, analyzing, and responding to system data throughout the software development and IT operations lifecycle. Key benefits of end-to-end logging and monitoring practices include:

End-to-end logging offers comprehensive visibility into system behavior, facilitating thorough performance analysis and issue identification.

These practices enable early identification of anomalies and bottlenecks, empowering teams to address issues before they impact end-users.

Continuous assessment of application and infrastructure health ensures proactive maintenance and system reliability.

Comprehensive logs and real-time data facilitate efficient troubleshooting and root cause analysis, expediting issue resolution.

Implementing end-to-end logging and monitoring practices empowers organizations to maintain system reliability, optimize performance, and deliver exceptional user experiences.
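End-to-end visibility depends on following a single request across every component it touches. A common technique is to stamp every log line with a correlation (request) ID. The sketch below does this with Python's standard `logging` and `contextvars` modules. The logger names `api` and `billing` and the 8-character ID format are hypothetical.

```python
import contextvars
import logging
import uuid
from typing import Optional

# Correlation ID of the request currently being handled; each thread or
# asyncio task sees its own value. "-" means "no active request".
request_id = contextvars.ContextVar("request_id", default="-")

class RequestIdFilter(logging.Filter):
    """Copy the current correlation ID onto every record this handler emits."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.request_id = request_id.get()
        return True

handler = logging.StreamHandler()  # writes to stderr by default
handler.setFormatter(logging.Formatter("%(request_id)s %(name)s: %(message)s"))
handler.addFilter(RequestIdFilter())

# Hypothetical component loggers sharing the same correlated output.
for component in ("api", "billing"):
    component_logger = logging.getLogger(component)
    component_logger.addHandler(handler)
    component_logger.setLevel(logging.INFO)

def handle_request(incoming_id: Optional[str] = None) -> str:
    # Reuse an ID propagated by an upstream service, or start a new one.
    rid = incoming_id or uuid.uuid4().hex[:8]
    token = request_id.set(rid)
    try:
        logging.getLogger("api").info("request received")
        logging.getLogger("billing").info("charge authorized")
    finally:
        request_id.reset(token)  # restore the previous value
    return rid

handle_request("abc123")
# Written to stderr by this handler:
# abc123 api: request received
# abc123 billing: charge authorized
```

In a real deployment, the ID would typically arrive in a request header from the upstream service and be forwarded on outgoing calls. That transport step is left out of this sketch.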

Selecting appropriate log management and monitoring tools is crucial for aligning with DevOps practices. Key considerations for choosing logging and monitoring solutions include:

Ensure that solutions can scale with infrastructure growth, handling increasing data volumes without performance degradation.

Choose tools that seamlessly integrate with existing DevOps toolchains, facilitating streamlined workflows and data exchange.

Select tools with robust security measures to protect log data and ensure compliance with industry regulations.

Opt for solutions that offer customization options to meet specific logging and monitoring requirements.

Considering these factors empowers organizations to select logging and monitoring solutions that enhance operational efficiency and align with DevOps best practices.

Centralized logging architectures consolidate logs from disparate sources, enabling efficient troubleshooting and analysis. Benefits of centralized log analysis include:

Centralized logs enable real-time issue detection and trend analysis, empowering teams to proactively address potential problems.

Consolidated logs facilitate correlation of events and rapid root cause identification, expediting issue resolution.

Centralized logging supports regulatory compliance by capturing and retaining all relevant logs in one place, where consistent retention and access-control policies can be enforced.

Analysis of system logs identifies performance bottlenecks and optimization opportunities, improving overall system efficiency.

By implementing a centralized logging architecture, organizations gain comprehensive visibility into system behavior, enabling proactive maintenance and rapid issue resolution.
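Once logs from different sources land in one place, correlating them is largely a matter of ordering and grouping. The following sketch assumes each source already emits time-ordered events carrying a request ID. It shows how a central store might merge those events into a single timeline and then pull out everything related to one failing request. The event tuple layout and sample data are hypothetical.

```python
import heapq
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# A log event: (timestamp in seconds, source, request_id, message).
Event = Tuple[float, str, str, str]

def merge_streams(*streams: Iterable[Event]) -> List[Event]:
    """Merge per-source streams (each already time-ordered) into one timeline."""
    return list(heapq.merge(*streams, key=lambda e: e[0]))

def group_by_request(events: Iterable[Event]) -> Dict[str, List[Event]]:
    """Collect every event that shares a request ID, preserving time order."""
    groups: Dict[str, List[Event]] = defaultdict(list)
    for event in events:
        groups[event[2]].append(event)
    return dict(groups)

web = [(1.0, "web", "r1", "GET /cart"), (1.4, "web", "r2", "GET /pay")]
db = [(1.1, "db", "r1", "SELECT cart"), (1.6, "db", "r2", "timeout after 5s")]

timeline = merge_streams(web, db)
for ts, source, rid, msg in group_by_request(timeline)["r2"]:
    print(f"{ts:.1f} {source:<4} {msg}")
# 1.4 web  GET /pay
# 1.6 db   timeout after 5s
```

`heapq.merge` streams its inputs lazily, so the same approach works for log sources that are too large to sort in memory.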

Responsive monitoring systems with automated alerting and orchestration capabilities are essential for maintaining system health and availability. Key aspects of such systems include:

Automated alerting detects performance issues in real time, enabling rapid incident response and minimizing downtime.

Orchestrated incident response workflows streamline communication and collaboration, reducing mean time to resolution (MTTR).

Monitoring systems provide valuable data for performance analysis and optimization, supporting continuous improvement efforts.

Integrating monitoring systems with automated alerting and orchestration tools enhances system reliability, minimizes downtime, and optimizes operational efficiency.
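Automated alerting usually requires a condition to hold for some time before it fires, so that a single noisy sample does not page anyone. The sketch below shows that idea. It is similar in spirit to the `for` clause in Prometheus alerting rules but does not reproduce that tool's behavior. The `AlertRule` type, metric name, and sample latency values are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class AlertRule:
    """Hypothetical rule: fire when `metric` exceeds `threshold` for `for_samples` samples in a row."""
    metric: str
    threshold: float
    for_samples: int

def evaluate(rule: AlertRule, samples: List[float]) -> Optional[int]:
    """Return the index at which the alert fires, or None if it never does."""
    consecutive = 0
    for i, value in enumerate(samples):
        # Count consecutive breaches; any sample at or below the threshold resets the count.
        consecutive = consecutive + 1 if value > rule.threshold else 0
        if consecutive >= rule.for_samples:
            return i
    return None

rule = AlertRule(metric="p95_latency_ms", threshold=500.0, for_samples=3)
# The single spike at index 1 is ignored; the sustained breach starting at index 3 fires at index 5.
print(evaluate(rule, [320, 900, 410, 620, 710, 880, 450]))  # 5
```

A production system would evaluate rules on a schedule against a time-series store and hand fired alerts to an incident-response workflow. Counting consecutive samples stands in for a time window here.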

Observability principles emphasize the importance of visibility into system behavior through metrics, traces, and logs. Benefits of observability include:

Metrics provide quantitative data points that enable performance analysis and capacity planning, supporting proactive maintenance and optimization.

Traces provide insight into transaction flows across services, facilitating bottleneck identification and latency analysis.

Logs capture detailed event information that supports troubleshooting and root cause analysis, expediting issue resolution and system optimization.

Leveraging observability principles provides organizations with holistic insights into system behavior, supporting data-driven decision-making and continuous improvement.
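Traces make bottleneck identification concrete by recording each request as a tree of timed spans. The sketch below computes each span's self time, meaning its duration minus the time spent in its direct children, to show where a request actually spent its time. It uses a hypothetical `Span` record rather than any tracing library's data model. The span names and timings are made up for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

@dataclass
class Span:
    """One timed operation in a trace (times in milliseconds)."""
    span_id: str
    parent_id: Optional[str]
    name: str
    start_ms: float
    end_ms: float

    @property
    def duration_ms(self) -> float:
        return self.end_ms - self.start_ms

def self_times(spans: List[Span]) -> Dict[str, float]:
    """Duration of each span minus its direct children (assumes children don't overlap).

    Keyed by span name for readability; assumes names are unique within the trace.
    """
    child_total: Dict[str, float] = {s.span_id: 0.0 for s in spans}
    for s in spans:
        if s.parent_id is not None:
            child_total[s.parent_id] += s.duration_ms
    return {s.name: s.duration_ms - child_total[s.span_id] for s in spans}

trace = [
    Span("1", None, "GET /checkout", 0, 480),
    Span("2", "1", "auth.verify", 5, 25),
    Span("3", "1", "inventory.reserve", 30, 90),
    Span("4", "1", "payment.charge", 95, 470),
    Span("5", "4", "db.insert", 100, 120),
]

times = self_times(trace)
bottleneck = max(times, key=times.get)
print(bottleneck, times[bottleneck])  # payment.charge 355.0
```

Total duration alone would point at `GET /checkout`, the root span. Self time shows that most of the latency sits inside `payment.charge` itself rather than in its database call.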

Applying AI/ML to DevOps automates repetitive tasks and supports data-driven decision-making, improving operational efficiency and resource allocation.

AI/ML models can anticipate performance issues and automate incident response, enhancing system reliability and availability.

Continuous monitoring combined with self-healing infrastructure improves system resilience and minimizes downtime.

Integrating AI/ML technologies into DevOps practices unlocks new possibilities for innovation and continuous improvement, enabling organizations to stay agile and responsive in dynamic environments.
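AIOps platforms typically rely on trained models. The core idea of flagging behavior that departs from a baseline can still be illustrated with a much simpler statistical stand-in. The sketch below is not a machine-learning model. It uses a rolling z-score over a trailing window as a hypothetical baseline detector to flag a CPU spike that might trigger automated remediation. The window size, threshold, and sample data are arbitrary.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Iterable, List

def detect_anomalies(values: Iterable[float], window: int = 5, z_limit: float = 3.0) -> List[int]:
    """Flag indices whose value deviates from the trailing window by more than z_limit std devs."""
    history: deque = deque(maxlen=window)  # last `window` observations (the baseline)
    anomalies: List[int] = []
    for i, value in enumerate(values):
        if len(history) == window:
            baseline = list(history)
            mu, sigma = mean(baseline), pstdev(baseline)
            # Skip flat baselines (sigma == 0) to avoid dividing by zero.
            if sigma > 0 and abs(value - mu) / sigma > z_limit:
                anomalies.append(i)
        history.append(value)  # note: anomalies also enter the baseline
    return anomalies

cpu = [41, 43, 40, 42, 44, 43, 41, 97, 42, 43]  # illustrative CPU utilization (%)
print(detect_anomalies(cpu))  # [7]
```

A trained model would replace the fixed mean-and-deviation baseline with one learned from history, for example one that accounts for daily seasonality. The surrounding flow of scoring each sample and acting on outliers stays the same.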
